📖Thinking in Systems: A Primer

- authors

- Meadows, Donella

- year

- 2008

p.11

I have yet to see any problem, however complicated, which, when looked at in the right way, did not become still more complicated. —Poul Anderson

- System

- elements (usually influence behavior the least)

- usually easily replaceable without changing the system’s behavior

- interconnections

- purpose or function (usually influences behavior the most)

- purpose or function is often hard to find. You need to look at the system’s behavior (not at what the system says)

- Systems cause their own behavior

- For large systems, purpose/function might come from the purposes of smaller subsystems. Furthermore, the function of the overall system might turn out to be something none of its subsystems wants.

- A system may change all its elements, but as long as it maintains all its interconnections, it is the same system. (p.16)

- (Inherent Existence)

- Over time both elements and interconnections are likely to change

- Stocks

- “a stock is the memory of the history of changing flows within the system” (p.18)

- “A stock takes time to change, because flows take time to flow” (p.23)

- (game based on stocks and systems?)

- Stocks allow inflows and outflows to be imbalanced for a while (independent) (p.24)

- When a stock needs to increase, people think first about increasing the inflow, but limiting the outflow might be more effective.
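A minimal sketch of the inflow/outflow point above, assuming a toy stock with constant flows per step. The function name and all numbers are made up for illustration and are not from the book. It shows that cutting the outflow moves the stock exactly as much as raising the inflow by the same amount; which of the two is cheaper depends on the real system.

```python
def simulate_stock(initial, inflow, outflow, steps):
    """Return the stock level at each step for constant inflow and outflow."""
    levels = [initial]
    for _ in range(steps):
        # A stock changes only through its flows.
        levels.append(levels[-1] + inflow - outflow)
    return levels


# Baseline: the stock drains because outflow exceeds inflow.
baseline = simulate_stock(initial=100, inflow=5, outflow=8, steps=10)

# Option A: raise the inflow by 3 units per step.
more_inflow = simulate_stock(initial=100, inflow=8, outflow=8, steps=10)

# Option B: cut the outflow by 3 units per step instead.
less_outflow = simulate_stock(initial=100, inflow=5, outflow=5, steps=10)

print(baseline[-1], more_inflow[-1], less_outflow[-1])
```

Both options stabilize the stock at the same level, so the outflow side deserves as much attention as the inflow side.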

- Feedback loops:

- balancing (B)

- reinforcing (R)

- Time to double stock is approx 70/interest-rate (p.33)

- e.g., to double money at a 7% interest rate, you need 70/7 = 10 years
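A quick check of the rule of 70, assuming interest compounds once per year (the function names are illustrative). The 70 is a rounded form of 100·ln 2 ≈ 69.3, so the rule is an approximation that works best for small rates.

```python
import math


def doubling_time_rule_of_70(rate_percent):
    """Approximate doubling time in periods: 70 divided by the rate in percent."""
    return 70 / rate_percent


def doubling_time_exact(rate_percent):
    """Exact doubling time for per-period compounding at the given rate."""
    return math.log(2) / math.log(1 + rate_percent / 100)


for rate in (1, 3, 7, 10):
    approx = doubling_time_rule_of_70(rate)
    exact = doubling_time_exact(rate)
    print(f"{rate}%: rule of 70 = {approx:.2f}, exact = {exact:.2f}")
```

At 7% the rule gives 10 years while the exact figure is about 10.24 years, which is close enough for mental arithmetic.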

p.34

If A causes B, is it possible that B also causes A?

- Feedback loops take some time to register and cannot react instantly (p.39)

- That’s why many economic models work differently in the real world

- supply-demand?

- A delay in balancing feedback loop makes system likely to oscillate (p.53-54)

- Changing delays can produce dramatic changes to the behavior (p.57)
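A toy illustration of the two delay notes above, assuming a single balancing loop that nudges a stock toward a target while reacting to an outdated reading of the stock. The model, the names, and the parameter values are my own assumptions, not the book's.

```python
def simulate_balancing_loop(target, initial, gain, delay, steps):
    """Stock adjusted toward a target using a reading that is `delay` steps old."""
    levels = [initial]
    for _ in range(steps):
        # The controller sees the stock as it was `delay` steps ago
        # (or the initial value if there is not enough history yet).
        seen = levels[max(0, len(levels) - 1 - delay)]
        levels.append(levels[-1] + gain * (target - seen))
    return levels


def overshoots(levels, target):
    """True if the stock ever rises above the target."""
    return any(level > target for level in levels)


immediate = simulate_balancing_loop(target=100, initial=0, gain=0.3, delay=0, steps=40)
delayed = simulate_balancing_loop(target=100, initial=0, gain=0.3, delay=3, steps=40)

print("no delay overshoots:", overshoots(immediate, 100))
print("3-step delay overshoots:", overshoots(delayed, 100))
```

Without the delay the stock approaches the target smoothly; with it, the same loop keeps correcting for a gap that has already closed, so the stock swings past the target and back.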

- All exponentially growing systems have at least one balancing loop (counteracting growth), because no physical system can grow forever (p.59)

- (doesn’t the universe grow forever?)

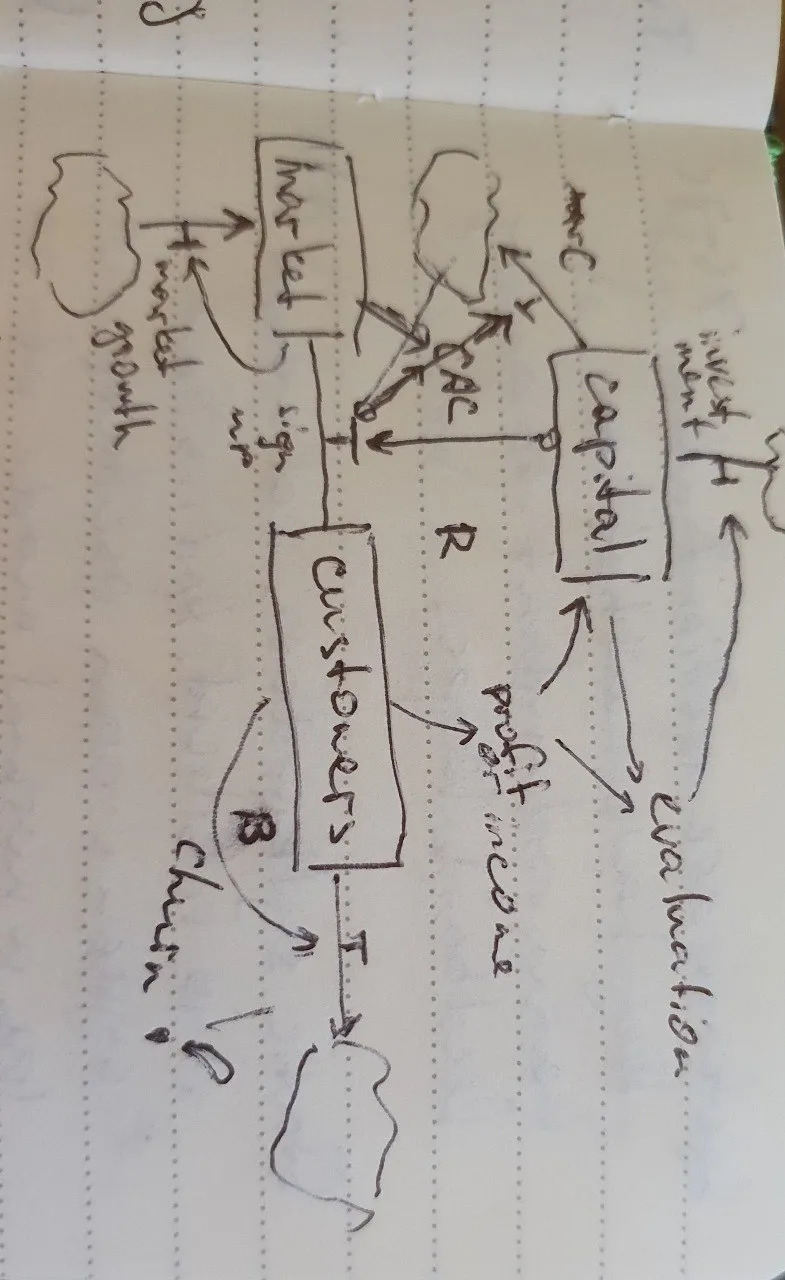

(Startups depleting market)

- even though the market is a non-renewable resource, customers continue to bring profits, so growth stops at the point when CAC~LTV and capital is depleted

- how does churn rate play in here?

- resilience

- systems are resilient when they have many redundant balancing feedback loops (p.75-76)

- a static system is not necessarily resilient, and resilient systems can oscillate (as a normal mode of operation) → Resilient system does not have to be static

- resilience is hard to spot and is often sacrificed for stability or high yield

- hierarchy

- hierarchical systems emerge from the bottom up. The purpose of the larger system is to serve its subsystems

- when a subsystem pursues its own goals while disregarding the goals of the system, that is called suboptimization.

- too tight control (from higher system) can be devastating (if subsystems are not able to sustain themselves as a result)

- there are no real boundaries in systems. All boundaries are mental constructs.

- if you forget about that, the boundaries (clouds) will surprise you (p.96-97) → Systems’ boundaries are imaginary

- systems thinkers might fall into the trap of defining boundaries too broadly (p.98) → Systems Thinking: Do not overinclude

- Shifting limits. A single input that is too low will limit the whole system. You often need to balance inputs, and you need to detect limiting inputs, so you know what to focus on. (p.100-102)

- Bounded rationality (Herbert Simon): people make rational decisions, but the information they have is too limited. That leads to unforeseen and unexpected consequences. (p.106) → Bounded rationality

- information is often incomplete and delayed

- furthermore, responses are delayed, too

- System traps (and opportunities)

- Policy resistance. If subsystems have conflicting goals, when one makes progress, the other starts pulling harder in the opposite direction.

- The more effective the policy, the harder the pull back.

- The solution is to let go (e.g., legalize), or reformat and focus on a common goal

- Tragedy of the commons. This occurs when there is a common resource everyone can benefit from, while the penalty for abusing it is shared by all actors. This leads to a system where each actor is rewarded for using the resource more, until the resource is exhausted. → Tragedy of the commons

- Can be fixed through education, privatization (so everyone deals with full consequences of their action), or regulation (law, mutual coercion)

- Drift to low performance (or eroding goals, boiled frog syndrome). When the perceived state of the system is worse than it actually is, the desired state (goal) gets lowered. This leads to less corrective action, which lowers the state further and erodes the goal further.

- do not let goals depend on past performance

- or better yet, set goals from best past results (to reinforce positive loop)
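A rough sketch of the eroding-goals loop, under assumptions of my own: perception is pessimistic by a fixed `bias`, the goal slides toward the perceived state, and corrective action competes with constant wear (`decay`). None of these parameter names or values come from the book. Setting `anchor_goal=True` models the first remedy above, a goal that does not depend on past performance.

```python
def simulate_drift(steps, bias=5.0, erosion=0.2, correction=0.5,
                   decay=2.0, anchor_goal=False):
    """Return a list of (state, goal) pairs, one per step."""
    state, goal = 100.0, 100.0
    history = []
    for _ in range(steps):
        # The state is perceived as worse than it actually is.
        perceived = state - bias
        if not anchor_goal:
            # The goal erodes toward the (pessimistic) perceived state.
            goal += erosion * (perceived - goal)
        # Corrective action closes part of the gap; wear pulls the state down.
        state += correction * (goal - state) - decay
        history.append((state, goal))
    return history


eroding = simulate_drift(steps=50)
anchored = simulate_drift(steps=50, anchor_goal=True)

print("eroding goal, final state:", round(eroding[-1][0], 1))
print("anchored goal, final state:", round(anchored[-1][0], 1))
```

With the anchored goal the state settles slightly below the goal; with the eroding goal both the state and the goal keep sliding for as long as the simulation runs.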

- Escalation. When one stock tries to surpass the other and vice versa. Leads to a reinforcing loop that increases both stocks and ends in the collapse of one. (pp. 124-126) (examples are arms races, PR, ads)

- can be escaped if one refuses to compete (and can survive short-term advantage of the other)

- or agree on mutual disarmament
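A small sketch of the escalation loop, assuming each side simply sets its stock a fixed `margin` above the other's current level. The rule and the numbers are hypothetical, and the collapse at the end is not modeled. A margin of zero stands in for a side that stops trying to get ahead.

```python
def simulate_escalation(a, b, margin, rounds):
    """Each round, A tops B's level by `margin`, then B tops A's new level."""
    history = [(a, b)]
    for _ in range(rounds):
        a = b * (1 + margin)  # A responds to B's current level
        b = a * (1 + margin)  # B responds to A's new level
        history.append((a, b))
    return history


arms_race = simulate_escalation(a=10.0, b=10.0, margin=0.1, rounds=5)
truce = simulate_escalation(a=10.0, b=10.0, margin=0.0, rounds=5)

for a_level, b_level in arms_race:
    print(f"A = {a_level:.1f}, B = {b_level:.1f}")
```

Because each response is proportional to the other side's level, the growth is exponential, which is why escalation gets out of hand faster than participants expect.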

- Success to the successful (competitive exclusion). When winning the competition gives the winner the means to compete more effectively, the winner grows until all competition is extinct. (pp.126-130) → Success to the successful. Ways out:

- diversify (choose another market)

- antitrust law

- rewards that do not help competing

- policies that level the field (e.g., heavier taxation for rich, charities, gifts, etc.)

(related to We can’t all be millionaires)

- Shifting the burden to the intervenor (addiction, dependence). When an intervention does not fix the problem but rather hides it (and makes it worse), the system weakens its own mechanism for dealing with the issue, which leads to further dependence on the intervention.

- way out: build up the system’s own mechanism for dealing with the issue and bite the bullet

- Rule beating. Following the rule, but not the spirit. Way out: (re)design rules to take into account more of the system (including rule beating) (p.136-137)

- Seeking the wrong goal. If the goal does not reflect the desired result directly, the system will satisfy the goal, but the outcome will not be what anyone wants. → Seeking the wrong goal

- Especially, beware of confusing result and effort. Otherwise, the system will produce effort and no results.

- Leverage points:

- 12. Numbers—constants/parameters

- easy to change but usually do not produce drastic long-term results unless they trigger other leverage points

- 11. Buffer

- Usually, hard to change

- 10. Stock-and-flow structures

- 9. Delays

- 8. Balancing feedback loops (feedback strength / impact to correct)

- 7. Reinforcing feedback loops

- 6. Information flows

- 5. Rules—incentives, punishment, constraints

- 4. Self-organization—power to change system structure

- 3. Goals

- 2. Paradigms (the mindset out of which goals and everything above arise)

- 1. Transcending paradigms (p.164)

- To see the world as a game (there is no true paradigm)

- Related to enlightenment

- The higher the leverage point, the stronger the system will resist change. i.e., knowing leverage points won’t make you superhuman (p.165)

- Mastery is not pushing leverage points, but dancing with the system (p.165)

- Systems are too complex to predict or control. Systems thinking lets you understand systems in the most general way, but does not let you predict and control the future

- but systems can be designed and redesigned to handle future events

System wisdoms:

- Before changing the system, study it. See how it behaves, try to get data (not memories), look at history (p.171)

- Expose your mental models to the daylight (p.172)

- Putting your models in writing (or diagrams) makes you see gaps and mistakes

- architecture?

- Honor, respect, and distribute information (p.173)

- Be precise about your use of language (p.174)

- We see only what we talk about (p.174)

- introduce systems terms into your vocabulary

- Pay attention to what is not measurable (quality), not only what is measurable (p.176)

- There are feedback loops that regulate feedback loops (i.e., system learning and changing itself). Design policies for system-changing feedback loops (or design these loops)

- “Intrinsic responsibility” results when the system provides direct and immediate feedback about the consequences to decision makers.

- (management)

- in the systems sense, there is no short-term/long-term distinction. Effects at all time scales are nested: each action has an immediate result and one that radiates for decades (p.183)

- Cross discipline borders (p.183)

Rest

- Donella Meadows also co-wrote The Limits to Growth (http://www.donellameadows.org/wp-content/userfiles/Limits-to-Growth-digital-scan-version.pdf)